The Growing Field of Blockchain Forensics

Blockchain technology has been making waves in the tech industry since it first emerged with the development of Bitcoin in 2008. It offers a decentralized and secure way to store and transfer data, making it an attractive option for industries ranging from finance to healthcare. However, the anonymity and decentralization that make blockchain technology so appealing also make it an attractive target for criminals. This is where blockchain forensics comes in.

Blockchain forensics is the field of analyzing blockchain transactions to uncover illegal activities such as money laundering, drug trafficking, and cybercrime. It involves using various tools and techniques to analyze the blockchain and track down the individuals or organizations involved in these activities. According to a report by Grand View Research, the blockchain forensic market is expected to reach $11.6 billion by 2028, driven by increasing concerns over the use of cryptocurrencies in illegal activities.

One of the key tools used in blockchain forensics is blockchain analysis software. This software allows forensic investigators to track transactions on the blockchain and analyze the patterns and behaviors of users. By examining the transactions associated with a particular wallet address, investigators can often determine the identity of the owner or user of that address.
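To make the idea of analyzing the transactions tied to an address more concrete, here is a minimal sketch of one widely described grouping rule, the common-input-ownership heuristic: addresses that are spent together as inputs of a single transaction are assumed to share an owner. This heuristic is not named in the article, and the transaction data and the function name `cluster_addresses` are hypothetical. Real analysis software combines many more signals.

```python
from collections import defaultdict


def cluster_addresses(transactions):
    """Group addresses that were ever spent together as inputs (union-find)."""
    parent = {}

    def find(addr):
        # Register unseen addresses as their own cluster root.
        parent.setdefault(addr, addr)
        while parent[addr] != addr:
            parent[addr] = parent[parent[addr]]  # path halving
            addr = parent[addr]
        return addr

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    for tx in transactions:
        inputs = tx["inputs"]
        for addr in inputs:
            find(addr)
        # Assumption: all inputs of one transaction belong to one owner.
        for other in inputs[1:]:
            union(inputs[0], other)

    clusters = defaultdict(set)
    for addr in list(parent):
        clusters[find(addr)].add(addr)
    return sorted(sorted(members) for members in clusters.values())


# Hypothetical transactions, for illustration only.
sample_transactions = [
    {"inputs": ["addr_A", "addr_B"], "outputs": ["addr_X"]},
    {"inputs": ["addr_B", "addr_C"], "outputs": ["addr_Y"]},
    {"inputs": ["addr_D"], "outputs": ["addr_Z"]},
]
clusters = cluster_addresses(sample_transactions)
print(clusters)
```

In this toy data, `addr_A`, `addr_B` and `addr_C` end up in one cluster because `addr_B` is spent alongside each of the other two, while `addr_D` stays on its own. Linking a cluster to a real identity still requires outside information.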

Another important aspect of blockchain forensics is the use of metadata. While addresses on the blockchain are not tied to real-world names, it is possible to gather metadata from other sources, such as social media or IP addresses, to link specific transactions to individuals or organizations. By analyzing this metadata, investigators can build a more complete picture of the individuals or groups involved in illegal activities.

The role of blockchain forensics is not limited to law enforcement agencies. Many businesses and organizations are also turning to blockchain forensics to ensure that their own blockchain networks are secure and free from illicit activity. By analyzing the behavior of users on their networks, businesses can identify potential vulnerabilities and take steps to prevent fraudulent activity.

Blockchain forensics also plays a key role in regulatory compliance. With the growing adoption of blockchain technology in various industries, regulators are increasingly concerned about the potential for cybercrime. By monitoring blockchain transactions and identifying suspicious behavior, regulators can ensure that businesses are complying with relevant laws and regulations.

Despite its growing importance, blockchain forensics is still a relatively new field, and there are many challenges to overcome. One of the biggest challenges is the lack of standardization in blockchain data. Unlike traditional financial transactions, blockchain transactions can take many different forms, and the data associated with these transactions can be difficult to interpret. Another challenge is the issue of privacy. While blockchain forensics can be a powerful tool for identifying criminal activity, it also raises concerns about privacy and civil liberties. As blockchain technology continues to evolve, it will be important to strike a balance between security and privacy.

The growing field of blockchain forensics is becoming increasingly important as more businesses and organizations adopt blockchain technology. With the potential for illegal activity on the blockchain, it is important to have the tools and techniques to analyze blockchain transactions and identify potential threats. While there are many challenges to overcome, the field of blockchain forensics will undoubtedly continue to grow in importance in the coming years.

Social Media Platforms’ Continuing Embrace of NFTs

It was almost one year ago that social media platforms such as Twitter began supporting non-fungible tokens (NFTs). NFTs are unique cryptographic tokens that cannot be replicated. They essentially show ownership of a certain digital item, like an image. Twitter, along with other platforms like Facebook and Instagram, has been working toward allowing its users to display NFTs as profile information. If you were to click on this information, you would be taken to a place where additional information is offered. This is very much a digital status representation that allows people to show off their membership or digital identity.

Reddit is the latest social media platform to embrace NFTs, with avatars available for purchase using fiat currency. Reddit requires a five percent portion of each transaction. However, Reddit refuses to acknowledge these NFTs for what they are. While they are part of the Polygon blockchain, they will be referred to as collectible avatars moving forward on this platform. Reddit has expressed that they view blockchain as a method of empowering and creating a more independent community on their site.

Facebook began allowing certain creators to showcase their NFTs in the summer of 2022 as well. This information is included on a digital collectibles tab in a user’s profile. This was shortly after Instagram (run by the same company) enabled NFTs to be shared, a surprising move given the fact that crypto products recently saw a decline in popularity. They had their worst performance ever in June 2022, when NFT sales reached just over $1 billion.

In addition to social media platforms, there are many brands that are embracing NFTs, such as Gucci, Adidas, Nike and Bose. This is a very different concept, as awareness is built through postings within community channels rather than through ads.

In the future, we might see a shift away from branding as community becomes more important. Content is still very relevant, but there will also be more conversation between communities and within communities. Transparency will be necessary as a learning curve develops for people who are just embracing NFTs. Unless you know some elite creators and collectors, you may not have even noticed NFTs making their way onto these platforms, but you may start to see more and more of them popping up over time.

We’re at a point where people want to have ways of showing off their status to others. NFTs are just one new method of doing so. This is a way to display membership and status within different identity groups. Each social media platform uses NFTs in a different way.

On Instagram, status may be achieved by way of fashion or photo skills. Twitter is all about making a big statement using a small number of words. Your wit and your knowledge are important. TikTok focuses on video, music and performing. On a positive note, social media platforms’ continuing embrace of NFTs may encourage a lot more authenticity than we’ve seen recently. Instead of just posting photos that attempt to generate a certain status for oneself, there is a lot more proof that comes from the investment of NFTs.

How Blockchain Could Alter the World of Content Creation and Distribution

Spencer Dinwiddie has over the course of his eight seasons in the National Basketball Association (NBA) established himself as a solid player. But it is entirely possible that he will make an even greater impact off the court.

Dinwiddie, who began the 2022-23 season with the Dallas Mavericks (the fourth NBA team to employ him), co-founded the blockchain-based platform Calaxy.com in 2020 with tech entrepreneur Solo Ceesay. The site, which remains in the beta stage, is designed to provide fans greater engagement with content creators like Ezekiel Elliott, a running back with the Dallas Cowboys of the NFL, and R&B star Teyana Taylor.

The site is one of many to emerge in recent years, and a continuation of the trend that began with the emergence of non-fungible tokens (NFTs). It has become readily apparent that content creators around the world – and there are 50 million of them, by one estimate – can peddle their wares courtesy of blockchain, a decentralized, immutable online ledger that records transactions. Most often associated with cryptocurrency, it has shown that it has value in a great many sectors, including healthcare, elections, real estate and supply chain management.

In this case it affords artists, photographers, designers, bloggers and social media specialists a great opportunity to monetize their work, while at the same time protecting such creations from scammers and plagiarists. As Dinwiddie told the website For the Win in 2021:

“The people who generate the content are the ones generating the value. It’s the same thing with the NBA. There is inherent value for making a transaction seamless or making it so where you consume the content is easy to find. It’s very nice to know I can go to Barclays Center to watch (star players) Kevin Durant, James Harden and Kyrie Irving. But at the end of the day, they’re the ones who are creating the value and they deserve a lion’s share of the profit. The reason why things like that don’t typically happen is because the power has been in legacy systems.”

That power is becoming far more diffuse. Blockchain-powered platforms like Wildspark, Visme, Privi, Steemit, Theta and Pixsy protect content creators from bad actors by giving them a verifiable digital ID. There is no censorship, and such sites afford these creatives an opportunity to build an online community, given the direct access they have to their audiences.

But the big thing is monetization. Carolyn Dailey, founder of a site called Creative Entrepreneurs, told the website Courier.com that such platforms enable creators to “sell directly to their communities, without going through traditional ‘routes to market,’ (which) also take a cut of their profits.”

Dailey pointed out that there is no need to use galleries, music producers or record labels, while at the same time noting that the potential drawbacks are blockchain’s hazy future, investor uncertainty and the potential tax implications for creatives.

At the same time, the upside is readily apparent. One painter, Sasha Zuyeva, told Courier that the interest in his work has “clearly grown.” And Dinwiddie believes “the sky’s the limit.” So while it would be a mistake to believe blockchain can solve every problem faced by content creators, it certainly represents a giant leap forward.

The Unsettling Truth About The Worldwide Tech War

Even before the economic turmoil now exacerbated by the Russian-Ukrainian war, the U.S. and China have been locked in a technology battle that has raised concerns not only in Washington, DC but throughout the country over economic competition and national security. While the U.S. leads in software and semiconductors, China clearly comes out on top when it comes to smartphones and the 5G network, as well as in artificial intelligence (AI), machine learning, and a wealth of other technologies. As the Chinese technology market increasingly distances itself from the West, this feeds existing tensions between China and the U.S.

According to a post on TheDiplomat.com, the U.S. government, in an effort to constrain or delay China’s technology advancements, has taken a series of measures and sanctions against Chinese tech companies. Since President Biden took office, Congress, the government, and several key think tanks have released 209 bills, policies, and reports concerning science and technology policies toward China. Huawei, one of China’s preeminent communications manufacturers, is one of the largest targets of U.S. sanctions. In 2020, a new regulation prohibited any entity from supplying chips made with U.S. technology to Huawei, yet the company continues to generate revenue. The diversity of the business environment reflects the complexity of the China-U.S. economic and trade relationship. While the two countries are independent, they nonetheless cannot completely sever ties, and economic and trade sanctions will bring huge losses to both.

Jacob Helberg, who led Google’s global internal product policy efforts to combat disinformation from 2016 to 2020, has written a book entitled “The Wires of War: Technology and the Global Struggle for Power,” in which he calls the power struggle between China and the U.S. a “gray war.”

Helberg told Axios.com there is a battle to control what users see on their screens, including information and software, and a “backend” battle to control the internet’s hardware, including 5G networks, fiber-optic cables, and satellites. He believes that this technology-driven war will influence the balance of power for the coming century, as without a solid partnership with the government, technology companies are unable to protect democracy from autocrats looking to sabotage it, from Beijing to Moscow and Tehran. To win this skirmish, Helberg suggests using trade policy and alliances to form a free and secure internet and information infrastructure, and the capability to levy what he calls “cyber sanctions” that restrict access to technologies and platforms controlled by hostile foreign governments.

America may believe that it can maintain its technological edge, but China is spending a significant amount of money on high-tech research. According to a post on ABC.net, China has announced a five-year plan worth $1.8 trillion to monopolize AI, robotics, 6G, and other new technologies by 2035.

James Green, former minister for trade affairs at the U.S. embassy in China, predicts that the tech war that grew during the Trump administration is not going to be resolved any time soon.

“Some of the issues, particularly around technology and technology ecosystems, are ones that will be with us for years to come,” he says.

How AI is Impacting Data Centers

In 2018, Gartner Distinguished VP Analyst David Cappuccio wrote a piece entitled “The Data Center is Dead,” in which he predicted that because of the continuing rise of such things as cloud providers, Software as a Service (SaaS) and edge services, some 80 percent of enterprises will shut down their traditional data centers by 2025.

Certainly the world’s seven million data centers, labelled “the building blocks of our online world” in an October 2020 piece on the website Bigstep.com, have continued to evolve since then. That is in no small part due to the pandemic, which has accelerated automation. Artificial intelligence is central to that, as it makes it possible to detect potential risks, optimize energy usage, ward off cyberattacks and even bolster on-the-ground security.

AI is, in the words of Jensen Huang, CEO of the computer systems design services company Nvidia, “the most powerful technology force of our time,” and other business leaders appear to have taken that to heart. Some 83 percent of organizations increased their AI/machine learning budgets from 2020 to 2021, well aware of the benefits AI can provide. As it was put in one blog post, no other technology improves the efficiency of a data center to quite the degree of AI. That was demonstrated by MIT researchers who devised an AI system that hastened processing speeds by as much as 30 percent, and by an HPE deep-learning application that identified and resolved bottlenecks.

Some of the most promising uses of AI to date have come in the area of energy conservation, which is of no small concern, considering data centers consume about three percent of the world’s electricity, and produce about two percent of its greenhouse gasses. And with more and more data being churned out by the year, it is estimated that data centers will gobble up 10 percent of the world’s energy by 2030.

Google showed how AI can be used to curtail this trend when, in 2016, it used its DeepMind AI to reduce the energy used for cooling by 40 percent at one of its data centers, and achieve an overall reduction in power usage effectiveness (PUE) overhead of 15 percent.
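For readers unfamiliar with PUE, the short sketch below shows how a 40 percent cut in cooling energy can translate into a 15 percent cut in PUE overhead. PUE is total facility energy divided by the energy used by the IT equipment, and the overhead is the part above 1.0. The kilowatt-hour figures are hypothetical, chosen only so the arithmetic is easy to follow. They are not Google's actual numbers.

```python
def pue(total_facility_kwh, it_equipment_kwh):
    """Power usage effectiveness: total facility energy over IT energy."""
    return total_facility_kwh / it_equipment_kwh


# Hypothetical energy split for one data center (assumed values).
it_kwh = 1000.0             # servers, storage, networking
cooling_kwh = 75.0          # cooling systems
other_overhead_kwh = 125.0  # lighting, power conversion losses, etc.

pue_before = pue(it_kwh + cooling_kwh + other_overhead_kwh, it_kwh)

# Apply a 40 percent reduction to cooling energy only.
cooling_after_kwh = cooling_kwh * (1 - 0.40)
pue_after = pue(it_kwh + cooling_after_kwh + other_overhead_kwh, it_kwh)

# "PUE overhead" is the portion of PUE above the ideal value of 1.0.
overhead_before = pue_before - 1
overhead_after = pue_after - 1
overhead_reduction = (overhead_before - overhead_after) / overhead_before

print(f"PUE before: {pue_before:.3f}, after: {pue_after:.3f}")
print(f"Overhead reduction: {overhead_reduction:.1%}")
```

With this assumed split, cooling accounts for 37.5 percent of the overhead, so trimming it by 40 percent lowers the overhead by about 15 percent. A different split would give a different result.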

AI also defends against cyberthreats by discerning any changes in normal network behavior, and can even power robots that patrol the grounds outside data centers.

It is inarguable, then: AI usage in data centers is on the rise, and as mentioned that is part of the larger trend toward automation. According to Mordor Intelligence, the data center automation market, which stood at $7.34 billion in 2020, is expected to be valued at $19.65 billion by 2026, a compound annual growth rate of 17.83 percent.
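The growth rate quoted above can be checked with the standard compound annual growth rate formula. The sketch below assumes six compounding years between 2020 and 2026, and the function name `cagr` is just illustrative.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.10 means 10 percent)."""
    return (end_value / start_value) ** (1 / years) - 1


# Market sizes in billions of dollars, as cited from Mordor Intelligence.
rate = cagr(start_value=7.34, end_value=19.65, years=2026 - 2020)
print(f"Implied CAGR: {rate:.2%}")
```

The result comes out at roughly 17.8 percent a year, in line with the cited figure. Any small difference in the last decimal place would come down to rounding in the published market sizes.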

While Dave Sterlece, an executive at the automation company ABB Ltd., told Datacenterdynamics.com that such innovation is “not hugely widespread yet,” he called that which has emerged to date “exciting” and added, “The potential is there.”

It seems certain to be realized in the years ahead, as data centers continue their evolution, and continue to power the online world.

A Breakthrough in Graphene-Based Water Filtration

Two new graphene-related developments this year — one by Brown University researchers in January and another by MIT researchers in August — showed that this one-atom-thick layer of carbon might offer promise as a means of water filtration. And if it someday proves scalable, it could go a long way toward alleviating the worldwide water shortage, and countering mankind’s unrelenting befouling of the planet’s waters.

The Brown team discovered an inventive way to vertically orient the nanochannels between two layers of graphene, thus making those channels passable for water molecules but little else. These channels normally have a horizontal orientation, but the researchers found that by taking the graphene, stretching it on an elastic substrate and then releasing it, wrinkles could be formed.

Epoxy was then applied to hold this material — labelled VAGME (vertically aligned graphene membranes) by the team — in place. Brown engineering professor Robert Hurt, who co-authored the research, summarized these findings as follows, in a release on the university’s website:

“What we end up with is a membrane with these short and very narrow channels through which only very small molecules can pass. So, for example, water can pass through, but organic contaminants or some metal ions would be too large to go through. So you could filter those out.”

The MIT team, meanwhile, found that graphene oxide foam, when electrically charged, can capture uranium and remove it from drinking water. This is a particularly valuable discovery, given the fact that uranium is continually leaching into reservoirs and aquifers from nuclear waste sites and the like, a development that can lead to various health issues among humans.

MIT professor Ju Li noted in a release on the MIT website that this filtration method can also be used for metals such as lead, mercury and cadmium, and added that in the future passive filters might give way to smart filters “powered by clean electricity that turns on electrolytic action, which could extract multiple toxic metals, tell you when to regenerate the filter, and give you quality assurance about the water you’re drinking.”

Graphene oxide has also been used by a British company, G2O Water Technologies, to enhance water filtration membranes, work that led in July of this year to the company becoming the first of its type to land a commercial contract.

The effectiveness of graphene-oxide membranes was first seen in 2017 at the UK’s University of Manchester, when researchers discovered that the membranes could sift out not only impurities but also salt, meaning that they could potentially be used to desalinate seawater.

As of 2018, just 300 million people around the world obtained at least some of their drinking water from desalination, and the process is often seen as costly and energy inefficient. Researchers at Purdue University announced in May of this year, however, that they had developed a more energy-efficient method of reverse osmosis, the most widely used type of desalination, a promising advancement indeed.

There is also the issue of pollution, which as mentioned is a considerable one. Studies have shown that 80 percent of the world’s wastewater is dumped back into the environment, and that little of it is treated, meaning it could be fouled by the aforementioned metals, as well as nutrients from farm runoff, plastics, etc.

There is no simple way to deal with such a deep-seated problem, but certainly graphene offers a potential solution.

Are NFTs Worth the Environmental Cost?

Not that any further proof was needed, but the night of March 27, 2021 offered definitive evidence of the impact non-fungible tokens (NFTs) are making — that besides transforming the world of digital art, they are even veering toward the mainstream.

On that night’s edition of “Saturday Night Live,” cast members Pete Davidson and Chris Redd joined musical guest Jack Harlow in a parody music video inspired by the song “Without Me,” by the rapper Eminem. And their subject matter was in fact NFTs, which were described as “insane,” since they are “built on a blockchain.”

“When it’s minted,” Harlow sang, “you can sell it as art.”

It was breezy and funny and maybe even a little educational. Who knew that we could be entertained while learning about digital tokens (essentially certificates of ownership) that allow artists to peddle their virtual wares via blockchains?

The reality about NFTs is far from a laughing matter, however, and from an environmental standpoint, even somewhat dire. Yes, NFTs open digital frontiers to artists often shut out of the legacy market, a $65 billion business where artists are able to sell their creations.

That said, things have meandered in curious directions, since these tokens can be assigned to any unique asset, including the first tweet by Twitter head Jack Dorsey, a rendering of Golda Meir, the late Israeli prime minister, and even a column on NFTs by the New York Times’ Kevin Roose. A major league baseball player named Pete Alonso, first baseman for the New York Mets, has even issued one, in hopes of providing financial support to his minor league brethren.

But as mentioned, the considerable downside is the carbon footprint made by NFT transactions. Memo Akten, an artist and computer scientist, calculated in December 2020 that the energy consumed by crypto art is equivalent to the amount used during 1.5 thousand hours of flying or 2.5 thousand years of computer use.

Others in the field have called NFTs an “ecological nightmare pyramid scheme,” and a recent post on the website The Verge explained why: The marketplaces that most often peddle NFTs, Nifty Gateway and SuperRare, use the cryptocurrency Ethereum, from the platform by the same name. This decentralized digital ledger uses “proof of work” protocols, which require users (a.k.a., “miners”) to solve arbitrary mathematical puzzles in order to add a new block, verifying the transaction.

This process is energy inefficient, and purposely so. The thinking is that hackers will find that this energy expenditure will not be worth their while — i.e., it acts as something of a security system, since these ledgers are not subject to third-party control, which would handle things like warding off cybercriminals.
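A toy version of such a puzzle makes the energy cost easier to see. The sketch below keeps hashing a block's contents with a changing counter (a nonce) until the hash starts with a required number of zeros. It is a simplified illustration only: it uses SHA-256 and a made-up block string, whereas Ethereum's actual proof-of-work algorithm and block format were different.

```python
import hashlib


def mine_block(block_data, difficulty):
    """Search for a nonce whose SHA-256 hash has `difficulty` leading zero hex digits."""
    target_prefix = "0" * difficulty
    nonce = 0
    while True:
        digest = hashlib.sha256(f"{block_data}{nonce}".encode()).hexdigest()
        if digest.startswith(target_prefix):
            return nonce, digest
        nonce += 1  # the only strategy is brute force: try the next number


# Hypothetical block contents, for illustration only.
block_data = "hypothetical NFT sale: token 42 -> alice"
nonce, digest = mine_block(block_data, difficulty=4)
print(f"Found nonce {nonce} after {nonce + 1} attempts: {digest}")
```

Each additional required zero multiplies the expected number of attempts by 16, which is where the deliberately high energy use comes from. Checking a proposed answer, by contrast, takes a single hash.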

Akten is of the opinion that a “radical shift in mindset” is in order, and writes on the website FlashArt that the future Ethereum 2.0 represents a step in that direction, as it uses energy-efficient “proof of stake” protocols, where mining power is based on the amount of cryptocurrency a miner holds.
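The core idea of proof of stake can be sketched as a weighted lottery: instead of racing to solve puzzles, the participant who adds the next block is picked at random, with odds proportional to the amount held. The validator names, stake sizes and the function `pick_validator` below are all hypothetical, and real protocols add many rules this simplified sketch leaves out.

```python
import random


def pick_validator(stakes, rng):
    """Pick one validator at random, weighted by the size of its stake."""
    validators = list(stakes)
    weights = [stakes[name] for name in validators]
    return rng.choices(validators, weights=weights, k=1)[0]


# Hypothetical stakes, in units of cryptocurrency held.
stakes = {"validator_a": 32, "validator_b": 64, "validator_c": 160}

rng = random.Random(0)  # fixed seed so the run is repeatable
picks = [pick_validator(stakes, rng) for _ in range(10_000)]
share_c = picks.count("validator_c") / len(picks)
print(f"validator_c was chosen in {share_c:.1%} of rounds")
```

Since `validator_c` holds 160 of the 256 units staked, it should be chosen in roughly 62.5 percent of rounds. No hashing race is involved, which is why the energy cost is so much lower.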

“That would essentially mean that Ethereum’s electricity consumption will literally over a day or overnight drop to almost zero,” Michel Rauchs, a research affiliate at the Cambridge Centre for Alternative Finance, told The Verge.

There are those who caution, however, that the full potential of Ethereum 2.0 is “still years away” from being realized, and others who believe that its shift toward a proof-of-stake model will lead to the platform’s demise.

There is a solution available now! Bitcoin Latinum has brought that green Proof of Stake to the Bitcoin ecosystem and is available for minting on platforms like the Unico NFT platform.

There also are other options. There is the practice of lazy minting, where an NFT is not created until its initial purchase. There are sidechains, where NFTs are moved onto Ethereum after they are minted on proof-of-stake platforms. There are bridges, which involve interoperability between blockchains.

As with everything else, there is also the possibility of using clean energy sources in the mining process. According to a recent post on Wired, they could power 70 percent of those operations. The counterargument is that if clean energy is used in that fashion, less of it will be available to fulfill other demands.

There are also those, like Joseph Pallant, founder and executive director of the Vancouver, BC-based nonprofit Blockchain for Climate Foundation, who believe that the solution to the problem lies with Ethereum’s continuing evolution. Pallant also wonders if the platform’s energy usage is overblown.

That would appear to be a minority opinion, however. Most observers believe NFTs, for all the opportunities they offer artists, need to become a better version of themselves — that their energy needs will need to be addressed in some fashion. More than likely, some combination of the above solutions will prove effective, but whatever the case, the need is clear. This is a problem that isn’t going away, and it will require some degree of resourcefulness to solve it.

Data, the “New Oil,” Cannot Be Used If Left Unrefined. Thankfully, AI Can Help.

British data scientist Clive Humby was famously quoted in 2006 as saying that “data is the new oil,” a statement that has been over-analyzed and occasionally criticized, but one that nonetheless retains its merits all these years later.

Soon after Humby made that statement, Michael Palmer, executive vice president of the Association of National Advertisers, amplified the point in a blog post, writing that data cannot be used if it is left unrefined. Data, he asserted, is not fact, any more than fact is insight. Context is vital in order to draw conclusions from any information that is gathered. And, he added:

The issue is how do we marketers deal with the massive amounts of data that are available to us? How can we change this crude into a valuable commodity – the insight we need to make actionable decisions?

These questions are even more consequential nowadays, given the exponential increase in the amount of data in recent years. According to Statista, some 74 zettabytes of data will be created, copied, captured, or consumed around the world in 2021, 72 more than in 2010. Even more tellingly, this year’s total is expected to double by 2024.

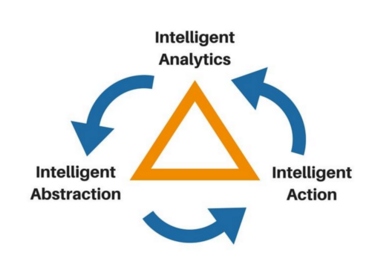

As a result, it is incumbent upon organizations to employ tools like artificial intelligence and machine learning, which enable them to ingest and process all this information, and in turn, gain insights that will make possible better decision-making and ultimately improve the bottom line.

Specifically, the convergence of Big Data and AI enables integration and management to be automated. It allows for data to be verified, and some advanced AI even makes it possible to access legacy data. The resulting increase in efficiency could lead, according to a 2018 McKinsey study, to the creation of between $3.5 trillion and $5.8 trillion per year in value — and perhaps as much as $15.4 trillion — across no fewer than 19 business areas and 400 potential use cases. McKinsey also concluded that nearly half of all businesses had adopted AI, or were poised to do so.

They include, not surprisingly, tech giants like Amazon, Microsoft and Netflix, but also such widely divergent organizations as BNP Paribas, the world’s seventh-largest bank, and the energy company Chevron, among many others.

BNP Paribas Global Chief Information Officer Bernard Gavgani described data as being “part of our DNA” in an interview with Security Boulevard and noted that between September 2017 and June 2020, the bank’s use cases increased by 3.5 times. The goals, he added, were to improve workflows and customer knowledge, two examples being a scoring engine that enables the bank to establish credit and an algorithm that increases marketing efficiency.

Chevron, meanwhile, increased its productivity by 30 percent after the implementation in 2016 of a machine learning system that was able to pinpoint ideal well locations by incorporating data from the performance of the company’s previous wells. More recently, the company entered into a partnership with Microsoft, enabling them to use AI to analyze drilling reports and improve efficiency.

The latter is proof that data is not only the new oil, but that it can help find it, too. But the larger point is something else Palmer mentioned in his follow-up to Humby’s long-ago assertion — that data is merely a commodity, while insight is the currency an organization can use to drive growth. You need the best tools to drill down and gain that insight, and in this day AI and ML represent the best of the lot.

Data Centers Clean Up Their Act (and Find a New Rhythm)

The 2017 song “Despacito,” by Puerto Rican artists Luis Fonsi and Daddy Yankee (and later re-mixed with no less a talent than Justin Bieber), remains one of the most popular songs of all time.

Its music video surpassed three billion YouTube views in record time, and by the end of 2019 it had been viewed no fewer than six billion times. Vulture called the song, the title of which means “slowly” in English, “a sexy Spanish sing-along” featuring “catchy refrains and (an) insistent beat.” And according to NPR it is “the culmination of a decade-long rise of sociological and musical forces that eventually birthed and cemented a style now called urbano.”

It is also, sadly, a contributor to the worldwide environmental crisis. Fortune noted last year that a mere Google search for “Despacito” activates servers in six to eight different data storage centers around the globe, and that YouTube views of the video — which were at five billion at the time the piece appeared — had consumed the energy equivalent of 40,000 U.S. homes in a year.

In all, data centers, the very backbone of the digital economy, soak up about three percent of the world’s electricity, and produce about two percent of its greenhouse gasses. And with data exploding — there were 33 zettabytes of it in 2017, and there are expected to be 175 by 2025 — these issues are only expected to become more acute. It is estimated that by 2030, data centers will consume 10 percent of the world’s energy.

“Ironically, the phrase ‘moving everything to the cloud’ is a problem for our actual climate right now,” said Ben Brock Johnson, a tech analyst for WBUR, a Boston-based NPR affiliate.

Thankfully, tech giants are already in the process of dealing with the problem. Sustainable data centers are not only a thing of the future; they are a thing of the present. In other words, the cloud has become greener, in the hope that it will become greener still.

That’s reflected in the fact that Microsoft’s stated goal is to slice its carbon emissions by 50 percent in the next decade; that Facebook bought more renewable energy than any other company in 2019 (and was followed by Google, AT&T, Microsoft and T-Mobile, in that order); that in April of this year Google introduced a computing platform it labelled “carbon intelligent;” and that Amazon Web Services hopes to be net-carbon-zero by 2040, a decade earlier than mandated by the Paris Agreement.

As Microsoft president Brad Smith told Data Center Frontier, his company sees “an acute need to begin removing carbon from the atmosphere,” which has led it to compile “a portfolio of negative emission technologies (NET) potentially including afforestation and reforestation, soil carbon sequestration, bioenergy with carbon capture and storage (BECCs), and direct air capture.”

The initial focus, Smith added, will be on these “nature-based solutions,” with “technology-based solutions” to follow. The net effect will be the same, however — a greener cloud. It comes not a moment too soon, as reflected in the aforementioned statistics, as well as the fact that the U.S. alone was responsible for about 33 percent of total energy-related emissions in 2018, or that by 2023 China’s data centers are expected to increase energy consumption by 66 percent.

How it happens

As mentioned, the commonplace use of technology in our society, while seemingly harmless, contributes to these emission levels. Everything from watching that “Despacito” video to uploading a picture to scrolling through your Twitter feed involves data centers.

While electricity might on the surface seem distinct from other emission sources, the reality is that it is generated largely from the same resources that fuel other industries. Coal, natural gas, and petroleum are the primary resources used to generate electricity. For example, according to a Greenpeace study, 73 percent of China’s data centers are powered by coal.

But it’s not just the fact that data centers require electricity to run. The sheer amount of energy consumed by data centers means that they produce a lot of heat, and cooling systems must be put in place to counteract that.

On average, servers need to maintain temperatures below 80 degrees Fahrenheit to function properly. Often, cooling makes up about 40 percent of total electricity usage in data centers. So altogether, the reliance on fossil fuels and nonrenewables, alongside the necessary cooling systems, is what ultimately causes the emissions from data centers.

Where to go from here

Many top-tier tech companies have been taking their cues from Susanna Kass, a member of Climate 50 and the data center advisor for the United Nations Sustainable Development Goals Program. Blessed with 30 years of data-center experience herself, Kass applauds the sustainability initiatives launched by such companies, and believes there will in fact be an escape from the “dirty cloud,” as she calls it, that has enveloped the industry.

In addition to the aforementioned steps, she said these centers need to curtail the practice of over-provisioning, which commonly involves providing one backup server for every four that are running. She added that coal obviously needs to be phased out as a power source for these centers, and that carbon neutrality must continue to be the top priority.

“The goal,” she told The New Stack, “is to promote better digital welfare as we evolve into the digital age.”

Indeed there is no other choice, given the fact that recent reports indicate we only have until 2030 to stop the climate catastrophe. With great (electric) power, comes great responsibility, and it seems the tech giants are now heeding that call.

What Makes a Great Nanomaterial?

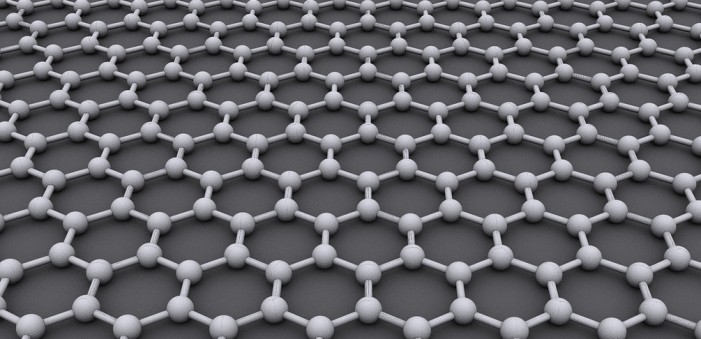

By this point, we have discovered a slew of natural, incidental, and artificial nanomaterials with a wide variety of properties and capabilities. Particularly prominent are graphene and borophene (one-atom-thick layers of carbon and boron, respectively), which have been widely hyped in recent years.

Graphene, discovered only in 2004, has alternately been labelled “the most remarkable substance ever discovered” and a substance that “could change the course of human civilization.” Not to be outdone, borophene, first synthesized in 2015, has been dubbed “the new wonder material,” as it is stronger and more flexible than even graphene.

Certainly there is a place for both in a wide variety of areas (not the least of which are areas like electronics and robotics), but the full extent of their capabilities is still being explored. And certainly their versatility has set them apart from other nanomaterials, like nanoenzymes, which have found particular application in the medical field (specifically, in tasks like bioimaging and tumor diagnosis), or the membranes that are used for water purification.

Yet, these nanomaterials (and many others) have yet to reach their full potential.

What factors determine the effectiveness of a nanomaterial for human use? What makes a truly great nanomaterial? The answer largely comes down to the material’s capability, functionality, and scalability.

Capability

How important a nanomaterial’s capabilities actually are depends on how it can be applied, and since the range of applications for various materials is vast, you could very well say that any nanomaterial is capable of fulfilling some kind of function. But relying on that many nanomaterials is cumbersome and inefficient. By discovering and utilizing a few nanomaterials with strong and multifaceted capabilities, we can make advancements and create new nanotech more rapidly.

The most produced nanomaterials to date are carbon nanotubes, titanium dioxide, silicon dioxide and aluminum oxide, and they are great examples of varied capability. Carbon nanotubes are most often used in synthetics. Titanium dioxide is used for paints and coatings, as well as cosmetics and personal care products. Silicon dioxide is used as a food supplement, and aluminum oxide is used in various industries.

The distinction for these four nanomaterials is that while their use is widespread, their capabilities pale in comparison to other materials. For instance, graphene and borophene have much greater potential in not only the aforementioned fields but also medicine, optics, energy, and more. Graphene and borophene truly demonstrate what a great nanomaterial’s capabilities should look like.

Functionality

It’s one thing to have the capability for greatness, but it’s another thing to be able to carry it out. This is where nanomaterials are put to the test, to see if they can integrate well into our technology and products in order to improve them. In most cases, nanotech and other advancements won’t consist solely of the specific nanomaterial, meaning that making sure it functions properly alongside other materials and in composite forms is essential.

This is where nanomaterials begin to have trade-offs. Titanium dioxide, zinc oxide and silicon dioxide are all utilized effectively and with ease, with very few issues related to function. However, stronger materials tend to have more unstable qualities: borophene in particular is susceptible to oxidation, meaning that the nanomaterial itself needs to be protected, making it difficult to handle. Graphene lacks a band gap, making it impossible to utilize its conductivity in electronics without some way to control it. Without overcoming such hurdles for implementation and functionality, these powerful nanomaterials will remain at arm’s length from greatness.

Scalability

Even once a nanomaterial is able to make the cut and perform well, the final hurdle it must clear is scalability and mass production. After all, part of the appeal of these technological advancements would be their widespread use. While nanomaterials like titanium dioxide, zinc oxide, and silicon dioxide have had no issue with production and subsequent use, their issues lie elsewhere.

There were long-standing questions about the scalability of graphene — particularly the costs involved — but there is now greater optimism on that front. A 2019 study predicted, in fact, that worldwide graphene sales would reach $4.8 billion by 2030, with a compound annual growth rate of 45 percent.

Borophene has likewise faced scalability issues, though in the case of that nanomaterial it has centered more on the production of mass quantities. (It was judged as a major breakthrough, for example, when Yale scientists produced a mere 100 square micrometers of the substance in 2018.) Efforts continue on that front, however, and much was learned from scaling up graphene. So it would appear to be only a matter of time.

If and when scalability is achieved, it seems safe to say that the full potential of both nanomaterials can be explored. We know they are versatile, but at that point, we will truly find out how versatile they can be.

Data Storage in 2021: What Lies Ahead

The forecast for 2021 in data storage is continued cloudiness, with increased edginess and integration.

The cloud is everything when it comes to enterprise data storage and usage; fully 90 percent of businesses were on the cloud as of 2019, and 94 percent of workloads will be processed there in 2021. But that only begins to tell the tale. The coronavirus pandemic brought about an increased need for agility and interoperability between systems in 2020, and that promises to continue, and then some.

With the pandemic raging on and remote work a necessity at many firms in the months ahead, we will see an accent on things like multi-cloud storage, all-flash storage and serverless storage, with an eye in the years ahead on edge computing. We will also see an ongoing need for integration tools like knowledge graphs and data fabrics.

The reality, as Marketwatch reported, is that Big Data serves as the spine of Big Business. As a result it must always be strengthened, so that it may meet the ever-changing needs of a community that has faced massive disruption during the healthcare crisis, and which will be forced to adapt to the ongoing data explosion.

The total amount of data created, captured or copied in the world (called the DataSphere) stood at 18 zettabytes in 2018, and is expected to reach as many as 200 zettabytes by 2025 (up from previous estimates of 175). In 2020 alone, some 59 zettabytes are expected to fall into one of these three categories, with a sizable sliver in the enterprise realm. As a result, roughly $78 billion is expected to be spent on data storage units around the globe in 2021.

The Data Analytics Report noted that artificial intelligence has always had a considerable impact on enterprise data storage, and will continue to do so. In particular, related technologies like machine learning and deep learning enable companies to integrate data among various platforms.

In addition, all-flash storage has become an appealing option, because of its high performance and increased affordability. Also coming into vogue is serverless computing, where a vendor provides the infrastructure but users are free to use it as they see fit.

In addition, multi-cloud offerings, which allow for data management across various on-premise and off-premise systems, are in the offing. And it is with this storage method that knowledge graphs (data interfaces) and data fabric (the architecture that facilitates data management) come into play.

Still ahead is a pivot toward edge computing, which allows for enhanced convergence with the cloud, and the distributed cloud, where services are operated by a public provider but divided between different physical locations.

The point is, the evolution of enterprise data storage, a challenge accelerated by a health and economic crisis, is ongoing. That is unlikely to end any time soon, given the explosion of data — and new solutions, which we can only begin to contemplate, are certain to arise in the years ahead.

Researchers Are Borrowing Inspiration from the Human Body to Filter Sea Water

The global water crisis is fast becoming acute. Due to pollution and other environmental factors (not the least of which is global warming), some 1.1 billion people currently lack access to clean water, and another 2.7 billion face a shortage of one month or more every year.

Worse, the World Wildlife Fund estimates that 67 percent of the planet’s population could be facing a water shortage by 2025.

Clearly drastic steps are in order, and one possibility is the purification of seawater. While traditional methods of doing so are sorely inefficient, researchers have discovered a promising new method that may prove utterly revolutionary.

The key? Mimicking the way that human bodies transport water within their cells.

Mimicking the Functions of Aquaporins

The highly sustainable water filtration method is being researched and developed at the Cockrell School of Engineering, a branch of the University of Texas. At first, the research team was trying to mimic the functions of proteins called aquaporins, which are found in cell membranes. Aquaporins act as channels for the transfer of water within the cell and across cell membranes. The team developed a network of synthetic cell membranes that included synthetic protein structures very similar to genuine aquaporins.

The hope was to copy the way aquaporins in cell membranes transport water. Aquaporins fashion pores in the membranes of cells in organs of the body where water is needed the most. These organs include the eyes, lungs and kidneys. The team of researchers wanted to build upon this concept as a sustainable way of filtering water and removing the salt content from seawater, a process called desalination.

Better Than Expected

They were not, however, as successful at mimicking this process as planned. The individual membranes didn’t work well alone. However, when several of them were connected in a strand, they were more effective at transporting and filtering water than previously hoped. The research team has dubbed these membrane strands “water wires.” You could think of this chain of membranes as transporting water molecules as fast as electricity travels through a wire.

These membranes remove salt from water so effectively that they could be used to develop a desalination process to replace current methods, which are expensive and inefficient. This new method would make desalination 1,000 times more effective than traditional methods are. Water could be purified on a large scale faster than ever to meet the demands of the world’s growing population.

Implications of Water Unsustainability

Although water is this planet’s most plentiful resource, not that much of it is fresh water that can be used for drinking and farming. Only three percent of the earth’s water is freshwater, and as noted above, this has major implications for the rising global population, which stood at 7.8 billion as of October 2020, and is projected to near 9 billion by 2035.

China (1.4 billion) and India (1.3 billion) have the most people, while the U.S. has over 330 million.

In 2015 the United Nations established clean water as one of its 17 sustainable development goals, the specific aim of which is to ensure that everyone on the planet has access to clean water by 2030. That means taking such steps as reducing pollution, increasing recycling and using water more efficiently, among other steps.

Many, many other organizations are tackling this problem, as it is something that defies solution by a single body. It is critical that these organizations do so, given that water is essential to human life, essential to our very survival.

How Blockchain is Transforming Gaming (and Vice Versa)

In 2017, the game CryptoKitties made waves throughout niche gaming communities online. Axiom Zen, the developer of the game, introduced the concept of unhackable assets. Players are able to purchase, sell, and breed kittens that are virtually uncompromisable.

CryptoKitties was built on Ethereum, one of the most popular decentralized platforms for running applications and completing cryptocurrency transactions. At one point, the game was so popular that a single virtual kitten sold for more than $100,000 and the Ethereum network experienced regular slowdowns.

Blockchain may just transform the gaming industry as we know it.

For one, blockchain allows developers to create truly “closed ecosystem” gaming environments, which reduces the influence of so-called “gray trading,” thereby fostering a higher level of trust in the gaming space.

Moreover, gamers can buy assets in the game using their cryptocurrency directly, which makes the process of handling actual money more rapid and secure. In-game items can be immutably owned by users, which solves the untenable problem of digital theft and hacking.

Even more interestingly, blockchain could blur the lines between separate digital worlds. Due to the distributed nature of blockchain, developers could enable the ability to transfer items between distinct game universes, in essence enabling a sort of digital multiverse. The potential for game world fluidity could completely shift the insular way that game studios operate.

Though CryptoKitties is recognized as the world’s first blockchain-based game, developers have gone on to develop specialized frameworks exclusive to gaming. Many games use the dGoods protocol, an open token standard for digital goods that was first built for the EOS blockchain.

In many ways, the blockchain and gaming partnership is critical, not necessarily for the future of gaming, but more so for the future of blockchain. Put simply, gaming is the first actual use case for widespread blockchain adoption.

“Gaming does not need blockchain. Blockchain needs gaming,” Josh Chapman, partner at the esports firm Konvey Ventures, stated. “Blockchain will only (see widespread) adoption and application once it provides significant value to the gaming ecosystem.”

From 2017 to 2018, blockchain investments saw a 280 percent increase. By 2019, companies were already actively investing in gaming enterprises employing blockchain methods: a company dubbed Tron committed $100 million to a special fund for game producers interested in utilizing blockchain technology.

Widespread adoption in the gaming industry may just signal that the blockchain is here to stay, and play.

A More Environmentally Friendly Battery

Though small in size, batteries play an outsized role in today’s society, powering everything from smartphones to electric cars. And as the world’s population continues to grow, so too will the need for batteries, which will likely lead to some thorny issues. Improper disposal of lithium-ion batteries can harm humans and wildlife alike, since those batteries leach toxic chemicals and metals over time. In addition, today’s battery production process tends to be cost-ineffective.

Aluminum batteries can address both problems, as their material costs and environmental footprint are significantly smaller than those of traditional batteries.

While aluminum batteries have been around for some time, the current incarnation uses a carbon-based anthraquinone cathode instead of a graphite-based one. With this new carbon-based design, electrons are absorbed by the cathode as energy is consumed. This helps increase energy density, one of the key reasons aluminum batteries are a more cost-effective and environmentally friendly solution than lithium-ion batteries, which have grown in popularity with the advent of electric cars.

And while lithium-ion batteries can be recycled, it is “not yet a universally well-established practice,” as Linda L. Gaines of Argonne National Laboratory told Chemical & Engineering News. The recycling rate of lithium-ion batteries in the U.S. (and the European Union) is about five percent, according to that same outlet, and it is projected that there will be two million metric tons of such waste per year by 2030.

That is a potentially staggering problem, and a far greater one than that which is presented by traditional alkaline batteries, which were hazardous to the environment when they contained mercury but now only contain elements that naturally occur in the environment, like zinc, cadmium, manganese and copper.

Aluminum batteries, then, are a safe alternative to lithium-ion batteries. They are also safer in another way, as they tend to exhibit lower flammability. The inertness property of the element and the ease of handling in ambient conditions also help in this regard.

Another benefit is that by gradually replacing lithium-ion batteries with their aluminum counterparts, lithium mining — a process that results in toxic chemicals leaking into the environment, leading to large-scale contamination of air, water, and land — can be curtailed. Aluminum batteries are also a viable replacement for cobalt-based batteries, which carry with them their own environmental concerns.

Lithium mining also tends to use a ton of water, roughly 500,000 gallons to extract a ton of lithium. While mining bauxite, the raw material from which aluminum is refined, also involves the use of water and energy, the resource requirements are less.

That said, aluminum batteries have yet to become the perfect alternative to the less sustainable types of batteries being used today. Compared to lithium-ion batteries, they are only half as energy-dense. Scientists and researchers are looking for ways to improve the electrolyte mix and charging mechanisms.

Overall though, aluminum is a significantly more practical charge-carrier when compared to lithium, due to its multivalent property. This means that every ion can be exchanged for three electrons, thus allowing up to three times greater energy density.

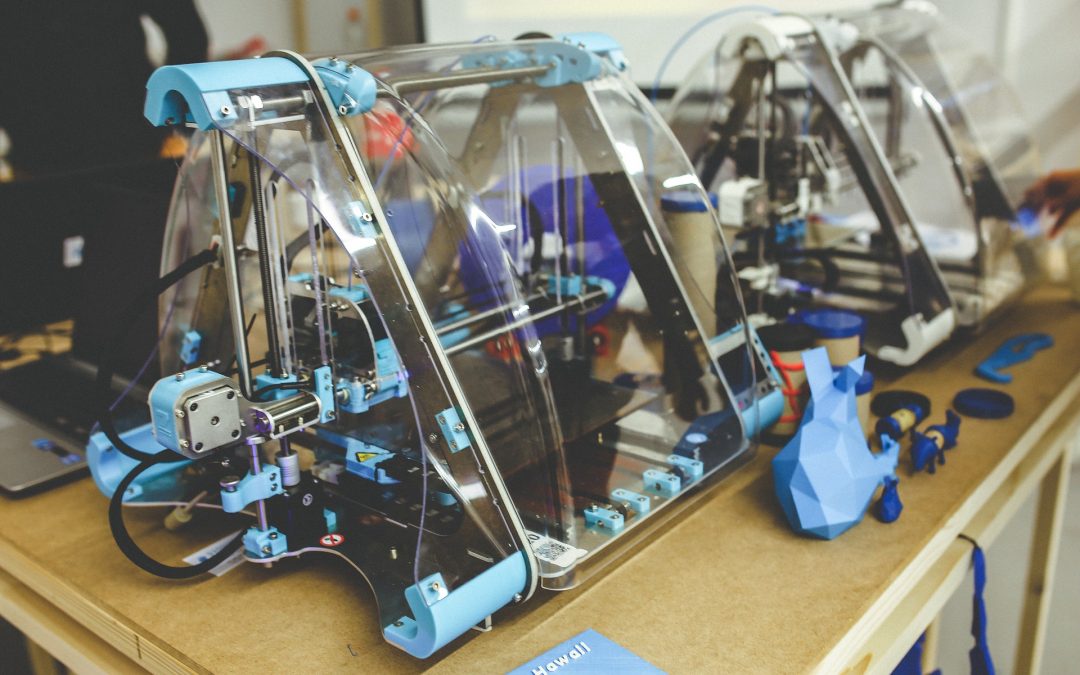

Can 3D Printing Help Solve the World’s Housing Crisis?

A 3D printer is being used to build a house in Italy, and while the method is not entirely new, the material being used in its construction is: locally sourced clay.

Mario Cucinella, head of his eponymous architectural firm based in Italy, designed this prototypical structure, which is being erected near Bologna and is the first 3D-printed home composed entirely of natural materials. The house is not overly large, consisting of a living room, bedroom and bathroom, but it is recyclable and biodegradable. It is also one more hint that 3D printing can help address Earth’s staggering housing problem.

As of 2019, 150 million people around the globe — i.e., two percent of the world’s population — were homeless. And 1.6 billion people, or 20 percent of the world’s population, lack adequate housing. The shortage is so acute that fully addressing it will likely involve building 100,000 new houses every day for the next 15 years, according to the United Nations.
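To put the United Nations figure in perspective, the short Python sketch below multiplies it out and compares the result with the 1.6 billion people said to lack adequate housing. It is back-of-the-envelope arithmetic only: it assumes 365-day years, and the people-per-house ratio is an illustration derived from the two quoted figures, not a number reported by the UN.

```python
HOUSES_PER_DAY = 100_000        # UN estimate quoted in the text
YEARS = 15                      # time span quoted in the text
PEOPLE_LACKING_HOUSING = 1.6e9  # figure quoted in the text


def total_houses(per_day: int, years: int, days_per_year: int = 365) -> int:
    """Total houses built at a constant daily rate (leap days ignored)."""
    return per_day * days_per_year * years


houses = total_houses(HOUSES_PER_DAY, YEARS)
people_per_house = PEOPLE_LACKING_HOUSING / houses
print(f"{houses:,} houses, roughly {people_per_house:.1f} people per house")
```

That works out to 547.5 million houses, or a little under three people per new home.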

Building 3D dwellings could, at least, be part of the solution, in that they are inexpensive, sustainable and easy to erect. And Cucinella’s creation is the latest step in this housing trend, which has gathered momentum in recent years. The clay of which it is composed is extruded through a pipe and set by a 3D printer known as a Crane WASP, which according to artists’ renderings results in a layered, conical look.

Construction began in the fall of 2019 and, before the coronavirus pandemic, was expected to be completed in early 2020.

Prior to this, concrete was the most commonly used material in 3D-printed buildings. That was true when a house was built in China in 2016, and when an office building was erected in Dubai that same year. It was true in Russia in 2017, the U.S. (specifically Texas) in 2018, Mexico in 2019 and the Czech Republic in 2020.

There were two exceptions. One was a rudimentary structure made of clay and straw in Italy in 2016, the other a tiny cabin made of bioplastic in The Netherlands in 2016. The latter was designed specifically to be used as a temporary dwelling in areas where natural disasters occur.

Certainly that’s possible with some of the other 3D dwellings as well. Those built in Russia and the U.S. were, for instance, erected in a single day. But the more common usage is expected to be as permanent dwellings for those living in areas where there is a dearth of suitable housing.

Consider the technology startup Icon and the housing nonprofit New Story, which built the aforementioned house in Texas. They are also the ones who in 2019 began construction on a village that will eventually consist of 50 such structures in Mexico, in an area of that nation where the median monthly income is $76.50. The idea is to offer these houses, which sell for $4,000 in developing countries, for $20 a month over seven years. The remaining cost will be covered by subsidies from New Story and private donations.
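The financing described above can be worked through in a few lines of Python. This is a sketch under stated assumptions: it treats the $4,000 price as applying to the houses in the Mexican village, assumes no interest or fees, and uses a hypothetical helper name.

```python
def financing_split(price: int, monthly_payment: int, years: int) -> tuple[int, int]:
    """Return (amount paid by the resident, amount left for subsidies and donations)."""
    paid = monthly_payment * 12 * years
    return paid, price - paid


paid, remaining = financing_split(price=4_000, monthly_payment=20, years=7)
print(f"Resident pays ${paid:,}; subsidies and donations cover ${remaining:,} "
      f"({remaining / 4_000:.0%} of the price)")
```

Under those assumptions the resident pays $1,680 over seven years, leaving $2,320, or 58 percent of the price, to be covered by New Story and private donors.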

Icon and New Story are also planning villages elsewhere in Mexico, as well as in such nations as Haiti and El Salvador.

The world’s housing problem, daunting as it is, will not be solved by any single method, but 3D printing offers a partial solution.

The First Step in Graphene Air Filters

Laser-induced graphene (LIG) air filters, developed in 2019 by a team at Rice University, have future implications for medical facilities, where patients face the constant threat posed by airborne pathogens.

The coronavirus, which is spread by respiratory droplets, has offered sobering proof of the havoc such diseases can wreak, but it is far from the only risk these patients might face. In all, one of every 31 of them will contract an infection while hospitalized, a rate that is only expected to increase. Dr. James Tour, the chemist who headed the Rice team, noted that scientists have predicted that by 2050, 10 million people will die every year as a result of drug-resistant bacteria.

As Tour also told SciTechDaily, “The world has long needed some approach to mitigate the airborne transfer of pathogens and their related deleterious products. This LIG air filter could be an important piece in that defense.”

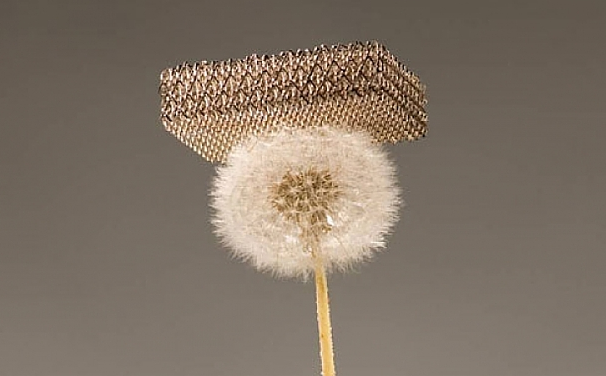

Tour and his team developed a self-sterilizing LIG filter that can trap pathogens and eradicate them with electrical pulses. Those pathogens (bacteria, fungi, spores, etc.) might be carried by droplets, aerosols or particulate matter, but testing showed that LIG, a porous, conductive graphene foam, was equal to the task of halting them. The electrical pulses then heated the LIG up to 662 degrees Fahrenheit (350 degrees Celsius) for an instant, destroying the pathogens.

The self-sterilizing feature could result in filters that, in the estimation of Tour’s team, last longer than those currently used in HVAC systems within hospitals and other medical facilities. They might also be used in commercial aircraft, he told Medical News Today.

Israel’s Ben-Gurion University of the Negev has taken LIG technology a step further and applied it to surgical masks. Because graphene is resistant to bacteria and viruses, such masks would provide the wearer with “a higher level of protection,” as the mask’s inventor, Dr. Chris Arnusch, told Medical Xpress.

Because of its antibacterial properties, graphene can be used in medical and everyday wearables. Manufacturers have also taken advantage of its strength, flexibility and conductivity to integrate it in a wide range of products, from touch screens to watches to light jackets.

As for LIG, it is a process discovered in Tour’s lab in 2014, and involves heating the surface of a polyimide sheet with a laser cutter to form thin carbon sheets. LIG also has many uses, whether in electronics, as a means of water filtration or in composites that can be used in building materials, automotive components, body armor, sports equipment or aerospace components.

Arnusch, who serves as senior lecturer and researcher at the BGU Zuckerberg Institute for Water Research (a branch of the Jacob Blaustein Institutes for Desert Research), cited water filtration as the inspiration for his work on surgical masks.

But because COVID-19 has offered such a grim reminder about the dangers of airborne illness, the air filters have garnered as much attention as any graphene-related application at present. It is compelling evidence of just how versatile (and valuable) graphene can be, and of what it might mean for our safety, and our future.

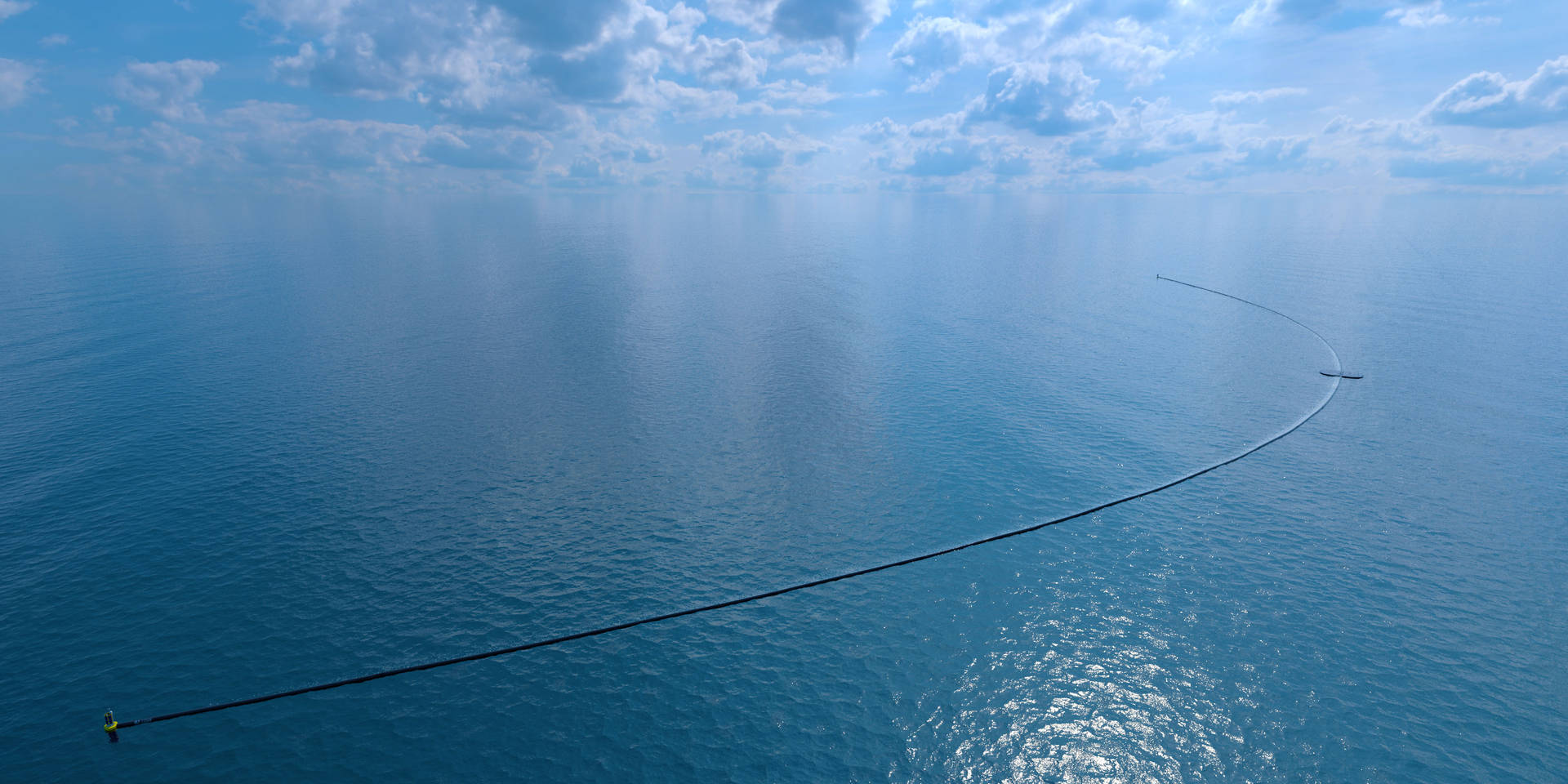

How Do We Finally Clean Up Our Polluted Oceans? This Company May Have an Answer

The Great Pacific Garbage Patch (GPGP) — alternatively known as the Pacific trash vortex — is an area in the Pacific Ocean teeming with plastic pollution. It’s one of the largest accumulations of plastic among the world’s oceans, and studies indicate it continues to exponentially increase in size. Sadly, it’s only one of countless trash dumps throughout the world’s oceans, rivers and waterways.

Enter the Ocean Cleanup, a Netherlands-based nonprofit environmental protection organization determined to remove this debris with new technology. The effort calls for a mix of using innovative trash removal technology and a public awareness campaign to reduce waste. Here’s a look at this ambitious project to clean and protect the earth’s water supply.

What Is The Ocean Cleanup?

The Ocean Cleanup aims to remove plastic pollution in both oceans and rivers on a massive scale. A primary goal beyond the original purpose of cleaning oceans is to clean the 1,000 most polluting rivers, which account for an estimated 80 percent of plastic pollution in oceans, according to the Maritime Executive.

CEO Boyan Slat, who founded The Ocean Cleanup in 2013, believes the organization needs to create solutions to prevent plastic from entering water systems in the first place. The Ocean Cleanup announced a refined model of its floating device, the Interceptor, in October 2019 to address both cleanup and prevention. So far the organization has installed four Interceptors in rivers in different countries: Indonesia (Jakarta), Malaysia (Klang), Vietnam (Mekong Delta) and the Dominican Republic (Santo Domingo). Additionally, Thailand and Los Angeles County are exploring similar possibilities.

The Ocean Cleanup is mainly funded by donations and sponsors such as Salesforce CEO Marc Benioff and PayPal co-founder Peter Thiel. A 2014 crowdfunding campaign generated over $2 million, and by November 2019 the organization had raised over $35 million. Its first ocean cleanup system was deployed in September 2018. A more refined version, called System 001/B, followed a year later and has been shown to be successful at collecting debris.

Interceptor Technology

The Interceptor is a solar-powered boat designed to remove over 50,000 kg of trash per day. The device is equipped with lithium-ion batteries that store and provide 24/7 energy. It’s an eco-friendly machine that doesn’t create noise or exhaust, nor is it harmful to marine life. By anchoring to a river, this device — which doesn’t interfere with other vessels — is able to capture floating debris. The system is connected with a computer to monitor data on collection, energy performance and health of electronic components.

The key to the Interceptor collecting trash is the use of several conveyor belts that scoop up debris and place it in onboard dumpsters. The waste is then transported to a local waste management facility. Deploying a fleet of these automated trash collectors can remove an enormous amount of plastic in a short time. One Interceptor is capable of removing up to 110,000 pounds of plastic per day.
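The two capacity figures quoted for the Interceptor, 50,000 kg and 110,000 pounds per day, describe the same amount in different units. A quick Python check, using the standard definition of the pound and an illustrative function name, confirms that they agree once rounded.

```python
KG_PER_POUND = 0.45359237  # exact definition of the international pound


def kg_to_pounds(kilograms: float) -> float:
    """Convert a mass in kilograms to pounds."""
    return kilograms / KG_PER_POUND


pounds = kg_to_pounds(50_000)
print(f"50,000 kg is about {pounds:,.0f} lb")
```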

Slat hopes to cut 90 percent of the plastic trash in our world’s oceans by 2040. The solution will involve mass producing the Interceptor to be used in different parts of the world, and scaling up the project with bigger fleets of up to 60 devices.

A crucial area targeted for ocean cleanup is the North Pacific Subtropical Gyre between Hawaii and the continental United States. This area is about the size of Alaska and contains about 79,000 tons of plastic pollution, including tiny fragments smaller than 5mm in length. Thankfully, after years of trial and error, a more streamlined Interceptor model was developed in 2019 that can hold both plastics and microplastics. With that advancement, Slat is confident his vision for mass cleanup is attainable.

How blockchain can help secure 3D printing

3D printing is an advanced technology that many people in and outside of the tech world are already highly familiar with. In a nutshell, 3D printing is the process of using a 3D printer to create a three-dimensional object from a computer-generated model. This is done by adding layer upon layer of material to an object, a process that is often referred to as additive manufacturing. As far as blockchain is concerned, it is an equally advanced yet much different type of technology. Blockchain is a system of records and transactions secured by advanced cryptography. By regulating 3D printing with blockchain, it is thought that the technology would be far safer in the hands of the general public.

3D Printing’s Inherent Benefits and Risks

In the coming decades, 3D printing technology is guaranteed to revolutionize life as we know it. Anyone will be able to turn their garage into a micro-manufacturing facility. The healthcare industry will be able to produce replacement organs made out of real human tissue. Essentially, anyone will be able to create anything at any time.

With the massive freedom that 3D printing will provide humanity, an equal number of risks will present themselves. Using 3D printers to easily produce powerful weapons, bombs, counterfeit items, and other malicious objects will be one of those risks. Governments and technology experts around the world are already looking for ways to preempt the risks posed by 3D printing technology. Using blockchain as a regulating system is being looked at as the primary solution.

Ways That Blockchain Can Help Secure 3D Printing

No Guns – 3D printers around the world have already been used to create homemade guns. Using 3D printers to produce projectile weapons is a major risk posed by the technology. Thankfully, blockchain-regulated 3D printing devices could be hardcoded in a manner that would make it impossible to produce any type of weapon with the device. Blockchain could also immediately alert the authorities if someone did attempt to create an unauthorized weapon with the device.

Intellectual Property Protection – 3D printing is going to make it easier than ever before to infringe upon intellectual property rights. For example, a branded product that took millions of dollars and years to develop could be easily mass-produced in someone’s garage for the cost of raw materials using a 3D printing device. It is thought that blockchain will be able to prohibit 3D printer users from infringing upon the intellectual property rights of others.

Taxation Enforcement – 3D printing technology will make it easier than ever before to produce black market goods on an industrial scale. This would prevent taxation bodies from collecting taxes owed on any underground commercial endeavors. It is thought that blockchain will be able to keep 100 percent accurate records on goods produced in order to alert government officials to underground commercial activities.

Secure 3D Bioprinting – It is not a question of if, but of when, 3D bioprinting will be used en masse to produce replacement organs and body parts for human beings. Once this technology is used on a wide scale, it will need to be secured by an ironclad system. With blockchain’s near-impenetrable cryptography-based core, it is likely to be the go-to security used for all future bioprinting technology.

Preventing Counterfeits – Advanced 3D printing technology of the future will be able to easily produce counterfeit IDs, counterfeit money, counterfeit credit cards, and any other type of counterfeit object. By using blockchain regulators on the 3D printing device and upstream at the internet provider level, it will be very difficult for home-based users to create counterfeit goods without local police being swiftly alerted.
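The record-keeping idea behind several of the points above can be illustrated with a minimal hash-chained log of print jobs. This is only a sketch under stated assumptions: it is a single-machine toy and not a distributed blockchain, and the field names ("printer", "design", "units") and function names are hypothetical. It shows one property only, namely that altering an earlier record becomes detectable because every entry commits to the hash of the one before it.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def block_hash(index, prev_hash, record):
    """Hash an entry's position, its predecessor's hash, and its record."""
    payload = json.dumps(
        {"index": index, "prev_hash": prev_hash, "record": record},
        sort_keys=True,  # stable key order so the hash is reproducible
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_record(chain, record):
    """Append a record, linking it to the hash of the previous entry."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    index = len(chain)
    chain.append({
        "index": index,
        "prev_hash": prev_hash,
        "record": record,
        "hash": block_hash(index, prev_hash, record),
    })


def verify_chain(chain):
    """Return True only if every entry still matches its stored hash and link."""
    prev_hash = GENESIS_HASH
    for i, block in enumerate(chain):
        if block["index"] != i or block["prev_hash"] != prev_hash:
            return False
        if block["hash"] != block_hash(i, prev_hash, block["record"]):
            return False
        prev_hash = block["hash"]
    return True


# Hypothetical print-job log for illustration.
log = []
append_record(log, {"printer": "printer-A", "design": "bracket-v1", "units": 3})
append_record(log, {"printer": "printer-A", "design": "gear-v2", "units": 1})
print(verify_chain(log))  # True: the log is intact

log[0]["record"]["units"] = 300  # tamper with an earlier entry
print(verify_chain(log))  # False: the stored hash no longer matches
```

A real deployment would also need many independent parties to hold copies of the log and agree on new entries; the sketch leaves that out and shows only the tamper-evidence step.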

From CO2 to Coal: Turning Back the Clock

With climate change at the forefront of most environmental policies and initiatives, scientists and researchers are scrambling to figure out a way to address the problem. Let’s face it, the earth is getting warmer at an alarming rate. The current warming trajectory means that parts of the world will be faced with constant weather-borne catastrophes in the coming decades. Over the much longer term, global warming could cause countless animal extinctions and even threaten the very existence of mankind itself. Thankfully, several leading solutions are being developed that could rewind the CO2 emissions clock. While scrubbing carbon dioxide from the air might seem impossible, it is very close to becoming a reality.

Carbon Capture and Storage

Some of the world’s leading scientists have developed an anti-emissions technology that is being called carbon capture and storage. In a nutshell, the technology is able to draw carbon dioxide from the earth’s atmosphere and turn it into raw usable coal. Once the coal is created, it can be safely stored in huge underground storage facilities that present no contamination risk to the greater environment. Thus far the carbon capture and storage technology has only been used on a small scale; however, the processes driving the technology are thought to be fully scalable to an industrial level.

How Does Carbon Capture and Storage Work?

An international group of researchers and scientists operating out of RMIT University in Australia has been able to create a fully functional electrocatalyst made out of liquid metal. This electrocatalyst is able to pull carbon dioxide out of the air and turn it into a solid coal-based matter through room temperature reactions. The solid carbon is then auto-transported to a large underground silo by the filtration system’s built-in solid carbon transport system. Scaled out, this technology has the potential to rewind the earth’s carbon clock.

How Scalable is It?

The carbon capture and storage technology was devised from the get-go with scalability in mind. The team of scientists that devised the technology claims that it is scalable on an industrial level. In fact, unless the technology is scaled out en masse globally, it would only be able to remove a marginal amount of carbon dioxide from the atmosphere. Placing a large-scale carbon capture and storage center in every major city on earth could help reduce carbon emissions by a double-digit percentage globally.

Could Carbon Capture and Storage Cure Global Warming?

Experts who have closely studied the carbon capture and storage technology believe that it could be a key component in finding a cure for global warming. While the technology alone could not offer a cure, if it is used in coordination with other initiatives it could help to dramatically reduce carbon dioxide in the atmosphere. The technology could realistically reduce carbon dioxide by a double-digit percentage if processing centers were widespread and built to industrial scale. These processing centers would also need to be strategically located in places where carbon emissions are the highest.

Does The Technology Pose Any Risks?

The carbon capture and storage technology does not pose any primary risks, as the process is clean and pollution-free. It does, however, present a serious secondary risk. Because carbon capture and storage technology is able to mass-produce raw coal that would be usable as a fossil fuel, there would always be a temptation to use this coal to create dirty and non-green energy. Laws and regulations, however, could be put into place that prohibit coal produced by carbon capture and storage technology from being used to produce further greenhouse emissions.

Engineering “Super Coral”

In Townsville, Australia, at the National Sea Simulator, researchers gather to watch the first bits of egg and sperm float away from the coral they have been observing. Madeleine van Oppen, a coral geneticist, readies her team for the spawning, as one of the coral species is spawning more quickly than anticipated. She and her team have to move fast, as it is imperative to prevent it from crossbreeding with the other coral in the tank. Van Oppen and her team are attempting to create new breeds of coral that can withstand the intense marine heating that has killed over half of Australia’s Great Barrier Reef. Rising global temperatures are destroying reefs in every ocean. Australia has committed 300 million for research in efforts to preserve and restore coral reefs. In doing so, it has become a beacon for scientists devoted to reefs, primarily at the Sea Simulator.

The Australian Institute of Marine Science created the Simulator, in which dozens of tanks have been developed to replicate the conditions of today’s oceans as well as simulate projected future conditions. It is here that Van Oppen and her team are attempting to re-engineer the corals by any method that may be fruitful. They are using old methods such as domesticating the corals and trying out new technology like gene-editing tools. Following the example of tech entrepreneurs in producing fast results, the team is quickly testing any new ideas and discarding those that hold no promise. Whole projects can be kept or abandoned over a span of ten hours. The material at the core of this work, the genetic material of the coral, is only released once a year. The scientists must move quickly to gather and test material, or the eggs will die without being fertilized by sperm, and they will have to wait another year for a further attempt.

Van Oppen and other scientists know they are working against a crucial and unforgiving clock. Over the past decade, underwater heatwaves have been decimating coral reefs in large numbers. By the time temperatures increase by 2 degrees Celsius, reefs will be gone from waters worldwide, and warming is estimated to reach 3 degrees by the end of the century. The other threat facing the reefs is the acidification of the oceans, as their pH is lowered by the absorption of carbon dioxide. The calcium carbonate shells of the corals and other marine life are vulnerable to the newly corrosive levels. Seven years ago, Van Oppen and Ruth Gates, a conservationist and coral biologist, began to wonder if there could be a way to give coral some extra advantage to help them survive.

Coral conservation thus far has focused on pollution, predators, fishers, and tourists, but not on something as radical as the duo had in mind. They advanced the idea of assisted evolution in a 2015 paper published by the National Academy of Sciences. In the same year, the charitable foundation of Paul Allen gave them funding for research. At this time, the research focuses on four main areas to help the coral: cross-breeding strains to create a heat-resistant variant, using genetic engineering to alter coral and algae, rapidly evolving tougher strains by growing them quickly, and manipulating the microbiomes of the coral.

Introducing genetically engineered lifeforms into already existing ecosystems has raised many concerns in the scientific community. David Wachenfeld, chief scientist of the Great Barrier Reef Marine Park Authority, compares the situation to the cane toad incident of 1935. Then, the toads were introduced to Australia to combat sugarcane-devouring beetles, but they ended up showing no interest in the insects and wreaked havoc by poisoning the surrounding wildlife. He fears that engineered coral could become predators to the existing reefs. In March, Van Oppen and her team were able to get permission to move the crossbred hybrids to the open ocean. It may be some time before any actual effects can be seen, but it is the only option the coral reefs have left for a chance to survive.

Blockchain Could Change The Way We Use Energy

Blockchain is widely regarded as the next major technological breakthrough for mankind. In the not-so-distant future, it is thought that blockchain will run everything from internet-based transactions to more complex government-run systems and core infrastructure. For anyone unfamiliar with blockchain, it is a completely transparent, highly encrypted, and decentralized means of storing information and digital records. With blockchain possessing fewer security risks than more standardized digital systems, it is slowly becoming the preferred method of managing energy distribution and consumption. When it comes to energy management and distribution, blockchain is far safer, far more transparent for consumers, and far more efficient in how it maintains infrastructure.

Setting Up Smart Grids

Numerous start-up ventures have recently made consumer-driven smart grids possible. In a nutshell, these grids grant consumers a far more transparent level of access and control over where they source their energy. This, in turn, creates far more demand for clean and green energy sources, spurring further innovation in these lucrative sectors. For example, consumers can use a blockchain to ensure that their sole source of energy is wind, so that all of the money they spend on energy goes to upstream wind farms.

Less Energy Market Manipulation

It has recently come to light that many of the more traditional fossil fuel-driven energy companies have used their market share for many decades to suppress cleaner energy products. The coal industry, for example, has gone out of its way to make it much harder for consumers to get access to cleaner green energy providers. With blockchain forcing transparency onto the energy game, the energy market will be scrutinized by end-users much more than has ever been the case. Fossil fuel companies that abuse their market power will receive massive backlashes under a blockchain-driven system.

Less Energy Waste

One of the primary benefits of using blockchain to manage energy infrastructure and consumption is that it operates far more efficiently than standard infrastructural systems. Routing energy through conventional means has been proven to be nearly 30 percent more wasteful than blockchain routing systems, due largely to increased blockchain energy routing controls. Standard energy routing systems often use age-old routing channels rather than more modern infrastructural channels. With greater control over how energy reaches the end-user and greater transparency over energy routes, energy providers will be forced to clean up wasteful delivery systems and operate far more efficiently.

Improved Energy Data Management

Another major incentive to use blockchain in energy use is that it offers improved data management. Due to blockchain’s increased core transparency, consumers will have greater access to current market energy prices, energy marginal costs, energy taxes, and energy law compliance factors. The non-blockchain energy conglomerates are known to heavily manipulate the data that is passed on to end-consumers. They also intentionally omit certain data sets in order to prevent consumers from knowing too much. With blockchain-driven energy systems, the energy industry’s age-old practice of hiding data will simply no longer be possible.

Solar Will Be Moving Fast