In 2011, venture capitalist Marc Andreessen correctly predicted, in his essay “Why Software Is Eating the World,” that software and online services would soon take over large sectors of the economy. In 2016 we can expect software to revolutionize the economy again, this time by eating the storage world. Enterprises that embrace this new storage model will have a much easier time riding the big data wave.

It’s no secret that data is the new king. From big data and analytics to artificial intelligence and machine learning, data is in the driver’s seat. Where we’ve come up short so far is in managing, storing, and processing this tidal wave of information. Without a new way of storing data so that it is easy to sort, access, and analyze, we’ll be crushed by the very wave that’s supposed to carry us to better business practices.

Storage’s old standby, the hardware stack, is no longer the asset it once was. In the age of big data, tying data to a particular hardware stack is a limitation. Instead, a new method is emerging that allows for smarter storing, collecting, manipulating, and combining of data by relying less on hardware and more on, you guessed it, software.

But not just any old software. What sets Intelligent Software Designed Storage (I-SDS) apart is that its computational model moves away from the long-standing von Neumann architecture, in which a processor fetches both instructions and data from a separate memory, toward a design that mimics how the human brain routinely processes vast amounts of data. After all, we’ve been computing big data in our heads our entire lives. We don’t need to store data just to store it; we need quick access to it on command.

One example of an I-SDS uses a clustering method based on the Golay code, a linear error-correcting code used in NASA’s deep space missions, among other applications, which allows big data streams to be clustered. This I-SDS also implements a multi-layer, multiprocessor conveyor so that transformations can be applied to continuous data flows on the fly.
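
The source does not describe how its Golay-based clustering works, so what follows is only a minimal Python sketch of one plausible reading. The sketch treats each record as a 23-bit fingerprint and assigns it to the nearest codeword of the binary Golay code. How fingerprints are derived from records is an assumption left open here, and the names `CODEWORDS` and `cluster_id` are hypothetical. The [23, 12, 7] Golay code is perfect: every 23-bit word lies within Hamming distance 3 of exactly one of its 4,096 codewords. So every fingerprint lands in exactly one cluster, and a codeword with up to three flipped bits maps back to its own cluster.

```python
# Hypothetical sketch: cluster 23-bit fingerprints by nearest Golay codeword.
# g(x) = x^11 + x^10 + x^6 + x^5 + x^4 + x^2 + 1 (0xC75) is one of the two
# standard generator polynomials of the [23, 12, 7] binary Golay code.

GOLAY_GENERATOR = 0xC75  # degree-11 generator polynomial
MESSAGE_BITS = 12        # 2**12 = 4096 codewords, i.e. 4096 clusters


def gf2_multiply(a: int, b: int) -> int:
    """Carry-less (GF(2)) polynomial multiplication."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        b >>= 1
    return result


# Non-systematic cyclic encoding: codeword(m) = m(x) * g(x), at most 23 bits.
CODEWORDS = [gf2_multiply(m, GOLAY_GENERATOR) for m in range(1 << MESSAGE_BITS)]


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two integers."""
    return bin(a ^ b).count("1")


def cluster_id(fingerprint: int) -> int:
    """Index of the nearest codeword, found by brute-force scan.

    Because the code is perfect, the nearest codeword is always unique.
    """
    return min(range(len(CODEWORDS)), key=lambda i: hamming(fingerprint, CODEWORDS[i]))


if __name__ == "__main__":
    base = CODEWORDS[42]
    print(cluster_id(base), cluster_id(base ^ 0b101))  # prints "42 42"
```

The brute-force scan keeps the sketch short. A production decoder would more likely use syndrome decoding than compare against all 4,096 codewords.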
Together, approximate search and stream extraction make it possible to process huge amounts of data while extracting the most frequent and relevant results from each search. These techniques give I-SDS a major advantage over obsolete storage models: they improve speed while still achieving high accuracy.
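
The article names neither its conveyor design nor its frequency-extraction algorithm. The following is therefore a hedged Python sketch that swaps in two well-known stand-ins.

- **Conveyor:** a chain of generator stages, so each record flows through every layer as it arrives.
- **Frequency extraction:** the Misra-Gries heavy-hitters algorithm, which finds frequent items in a single pass with bounded memory.

The stage names `normalize` and `drop_empty` are hypothetical. The generators run in one process, whereas a true multiprocessor conveyor would place each layer on its own worker.

```python
from typing import Callable, Iterable, Iterator

# Hypothetical stage type: each conveyor layer consumes and yields records.
Stage = Callable[[Iterable[str]], Iterator[str]]


def normalize(records: Iterable[str]) -> Iterator[str]:
    """Layer 1: trim whitespace and lower-case each record."""
    for r in records:
        yield r.strip().lower()


def drop_empty(records: Iterable[str]) -> Iterator[str]:
    """Layer 2: filter out records with no content."""
    for r in records:
        if r:
            yield r


def conveyor(source: Iterable[str], *stages: Stage) -> Iterator[str]:
    """Chain stages lazily; nothing runs until the result is consumed."""
    stream: Iterable[str] = source
    for stage in stages:
        stream = stage(stream)
    return iter(stream)


def misra_gries(stream: Iterable[str], k: int) -> dict[str, int]:
    """One-pass heavy hitters with at most k - 1 counters.

    Every item occurring more than n / k times in a stream of n items is
    guaranteed to survive; returned counts are lower bounds on true counts.
    """
    counters: dict[str, int] = {}
    for item in stream:
        if item in counters:
            counters[item] += 1
        elif len(counters) < k - 1:
            counters[item] = 1
        else:
            # No free counter: decrement all, evicting any that reach zero.
            for key in list(counters):
                counters[key] -= 1
                if counters[key] == 0:
                    del counters[key]
    return counters


if __name__ == "__main__":
    raw = ["  GET /a", "GET /b", "", "get /a", "GET /A ", "GET /c"]
    print(misra_gries(conveyor(raw, normalize, drop_empty), k=3))  # {'get /a': 2}
```

Misra-Gries trades exact counts for constant memory. That property fits the "process huge amounts of data while extracting the most frequent results" goal, but it is an illustrative choice, not the method the source describes.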

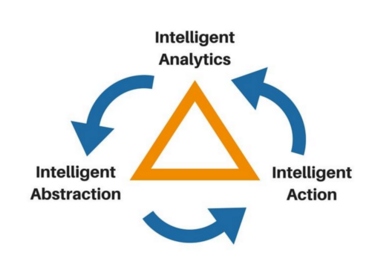

The key to successful I-SDS rests on three pillars:

1. Abstraction

The hallmark of an SDS with a rich abstraction layer is the ability to seamlessly integrate legacy systems, current systems, future systems not yet known, and even component-level technologies. Such a layer lets a broad set of data services act on the data with high reliability and availability. It also supports policy and access controls, which provide the mechanisms for making resource trade-offs and enforcing corporate policies and procedures. SDS further supports non-disruptive expansion of capacity and capability, geographic diversity, and self-service models. Finally, abstraction allows the system to incorporate the growing number of hybrid public/private cloud infrastructures and to optimize their use.
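
The article describes abstraction only at the architectural level. The following is a minimal Python sketch of one way it could look, assuming a hypothetical `StorageBackend` interface that legacy, current, and cloud systems are each adapted to. The abstraction layer routes every write through a policy check, and it accepts new backends without moving data already placed. The rule "data tagged pii must stay on premises" is an illustrative assumption, not a policy from the source.

```python
from dataclasses import dataclass, field
from typing import Protocol


class StorageBackend(Protocol):
    """Hypothetical uniform interface every underlying system is adapted to."""
    name: str
    on_premises: bool

    def put(self, key: str, data: bytes) -> None: ...
    def get(self, key: str) -> bytes: ...


@dataclass
class InMemoryBackend:
    """Stand-in for a legacy array, a current system, or a cloud bucket."""
    name: str
    on_premises: bool
    _store: dict[str, bytes] = field(default_factory=dict)

    def put(self, key: str, data: bytes) -> None:
        self._store[key] = data

    def get(self, key: str) -> bytes:
        return self._store[key]


@dataclass
class AbstractionLayer:
    """Backends are listed in order of preference."""
    backends: list[StorageBackend]
    _location: dict[str, StorageBackend] = field(default_factory=dict)

    def add_backend(self, backend: StorageBackend) -> None:
        """Non-disruptive expansion: existing placements are untouched."""
        self.backends.append(backend)

    def put(self, key: str, data: bytes, tags: set[str]) -> str:
        # Assumed corporate policy: "pii" data may only live on premises.
        allowed = [b for b in self.backends if b.on_premises or "pii" not in tags]
        if not allowed:
            raise PermissionError(f"no backend satisfies policy for {key!r}")
        target = allowed[0]  # most preferred backend that passes the policy
        target.put(key, data)
        self._location[key] = target
        return target.name

    def get(self, key: str) -> bytes:
        return self._location[key].get(key)


if __name__ == "__main__":
    legacy = InMemoryBackend("legacy-array", on_premises=True)
    cloud = InMemoryBackend("public-cloud", on_premises=False)
    layer = AbstractionLayer([cloud, legacy])
    print(layer.put("invoice-17", b"total=40", tags={"finance"}))  # public-cloud
    print(layer.put("employee-3", b"name=...", tags={"pii"}))      # legacy-array
```

Callers only ever see `put` and `get` on the layer. That separation is what would let a new system be added behind it without changes to applications.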

2. Analytics

Analytics has become the new currency of companies. Tableau (NYSE: DATA) and Splunk (NASDAQ: SPLK) have shown the broad demand for analytics and visualization tools that do not require trained programmers. These tools made analytics and visualization available to a broad class of users in the enterprise. User experience is a key component: simplicity combined with power. Cloud and mobile accessibility ensure that data is available, scalable, and usable anywhere and anytime. Cloud brings scale along several dimensions: data size, computing horsepower, and accessibility. Multi-tenant systems with role-based security and access control allow analytics and visualizations to be shared with a broad set of enterprise (and partner) stakeholders, and that broader audience increases the collective intelligence of the system. Heterogeneous, multi-tenant cloud systems allow analytics that cross systems, vendors, and in some cases customer boundaries. This rapidly enlarges the available data set and can produce much faster and more relevant results.
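
To illustrate the multi-tenant, role-based access idea, here is a minimal Python sketch. It assumes hypothetical roles (`viewer`, `analyst`, `partner`) and a per-record `shared` flag. Raw rows stay inside the caller's tenant. Aggregate queries may also draw on records that other tenants have explicitly opted to share, which is one simple way analytics could cross customer boundaries under consent. None of these names come from the source.

```python
from dataclasses import dataclass
from statistics import fmean

# Hypothetical role -> permission mapping; role names are illustrative.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "viewer": {"read"},
    "analyst": {"read", "aggregate"},
    "partner": {"aggregate"},
}


@dataclass(frozen=True)
class User:
    name: str
    tenant: str
    role: str


@dataclass(frozen=True)
class Record:
    tenant: str
    metric: str
    value: float
    shared: bool = False  # tenant opted in to cross-tenant analytics


def require(user: User, permission: str) -> None:
    """Raise unless the user's role grants the permission."""
    if permission not in ROLE_PERMISSIONS.get(user.role, set()):
        raise PermissionError(f"{user.name} ({user.role}) lacks {permission!r}")


def read_values(user: User, records: list[Record], metric: str) -> list[float]:
    """Row-level access: raw values from the user's own tenant only."""
    require(user, "read")
    return [r.value for r in records if r.tenant == user.tenant and r.metric == metric]


def average(user: User, records: list[Record], metric: str) -> float:
    """Aggregate access: own tenant plus records other tenants chose to share.

    Raises statistics.StatisticsError if no visible records match.
    """
    require(user, "aggregate")
    return fmean(
        r.value for r in records
        if r.metric == metric and (r.tenant == user.tenant or r.shared)
    )
```

A real system would enforce these checks in the storage and query layers rather than in application code. The sketch only shows how tenant isolation and role checks combine.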

3. Action

Intelligent Action is built on comprehensive API-based interfaces. Exposing APIs allows capabilities to be extended and resources to be applied where they are needed. Closed, monolithic systems from established and upstart vendors alike essentially say, “Give me your data, and as long as it’s only my system, I will try to do the right optimization.” But applications and large data sets are complex. Over the 10-year life of a system, an enterprise will almost certainly deploy many different capabilities from numerous vendors. An enterprise may also wish to optimize along parameters that lie outside a monolithic system’s understanding, such as the cost of the network layer, the standard deviation of response time, and the percentage of workload running in a public cloud. Furthermore, the lack of fine-grained controls over items like caching policy and data reduction methods makes it extremely difficult to balance the needs of multiple applications in one infrastructure. Intelligent Action therefore requires a rich programmatic interface, a set of fine-grained APIs, that the I-SDS can use to optimize across the data center, from the application layer down to the component layer.
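
To make the multi-parameter optimization concrete, here is a hedged Python sketch in which an enterprise scores candidate placements on the parameters the paragraph names:

- network cost
- standard deviation of response time
- share of workload in a public cloud, treated here as a hard limit

The `Placement` and `Objectives` types, the weights, and the cache-policy and data-reduction fields are all hypothetical. A real I-SDS would obtain these values, and apply the chosen knobs, through its own fine-grained APIs.

```python
from dataclasses import dataclass
from statistics import pstdev


@dataclass(frozen=True)
class Placement:
    """One candidate configuration for a workload (all fields hypothetical)."""
    name: str
    network_cost_per_gb: float
    response_times_ms: tuple[float, ...]
    public_cloud_fraction: float  # 0.0 to 1.0
    cache_policy: str             # knob to apply if chosen, e.g. "write-back"
    data_reduction: str           # knob to apply if chosen, e.g. "dedupe"


@dataclass(frozen=True)
class Objectives:
    """Enterprise-specific weights and limits (assumed defaults)."""
    weight_network_cost: float = 1.0
    weight_latency_stdev: float = 1.0
    max_public_cloud_fraction: float = 0.5


def score(p: Placement, obj: Objectives) -> float:
    """Weighted cost, lower is better; a hard-limit violation scores infinity."""
    if p.public_cloud_fraction > obj.max_public_cloud_fraction:
        return float("inf")
    return (obj.weight_network_cost * p.network_cost_per_gb
            + obj.weight_latency_stdev * pstdev(p.response_times_ms))


def choose(candidates: list[Placement], obj: Objectives) -> Placement:
    """Pick the lowest-scoring placement that satisfies the hard limits."""
    best = min(candidates, key=lambda p: score(p, obj))
    if score(best, obj) == float("inf"):
        raise ValueError("no candidate satisfies the hard limits")
    return best
```

Changing only the `Objectives` can change the answer. That is the point the paragraph makes about enterprise-specific optimization that a closed system cannot see.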

Into the Future

This type of rich capability is what underlies the private clouds of Facebook, Google, Apple, Amazon, and Alibaba. I-SDS will allow the enterprise to achieve the scale, cost reduction, and flexibility of these leading global infrastructures while maintaining corporate integrity and control over its precious data. The time has come for software to eat the storage world, and enterprises that embrace this change will come out on top.